SEO and web development go hand in hand. While often overlooked, a healthy on-page foundation can either be an absolute blessing or a hindrance holding back every piece of content and ranking from its true potential.

It’s a big deal.

Great SEO web development can be the difference between #1 rankings and endless frustration over low sitewide organic traffic.

How does your site hold up? Are you making errors that put you at risk for harsh Google penalties? Are you turning a blind eye to sitewide issues that could be setting you back?

No need to worry. This article will do a deep dive into some of the most common on-page SEO sins and how a web developer or web designer can correct them.

By the end, you’ll have the SEO for web developers basics down pat AND know how to avoid some of the lurking landmines you could fall victim to.

SEO for web developers basics

Mobile Friendliness

Mobile traffic is at an all-time high. According to BroadbandSearch.net, mobile traffic accounts for over 50% of total traffic. Back in 2013, that number was around 15%. And it doesn’t appear to be slowing down.

Over the last few years, Google has switched over 70% of websites to mobile-first indexing. And as of March 2021, Google will switch all sites over – whether they’re prepared or not.

In a nutshell, mobile-first indexing is when Google crawls the mobile version of your website and makes that the primary version. If you have an unresponsive, mobile UNfriendly website, Google probably won’t display your pages as often in search results.

In this day and age, having an unresponsive mobile website is a recipe for disaster. It means over 50% of your users are most likely going to have a poor user experience.

Even if your website is responsive, many other mobile-related issues could damage your SEO:

- Mobile pages with distracting popups that interfere with the content

- Mobile pages that display different content than the desktop version

- Poor website design that makes it difficult for users to navigate the site

- Overall page speed (we’ll discuss this later)

Duplicate Content

The three most common sources of duplicate content web developers face are reused product descriptions, URL parameters going astray, and missing canonical tags.

Product Descriptions

Usually, the manufacturer gives the retailer or distributor a standard product description. Unfortunately, because that same product is sold by hundreds (if not thousands) of different websites, it results in lots of duplication.

To Google, this screams, “lazy site owner who doesn’t want to provide unique, high-value content to its users.”

Additionally, product pages often have the same information for each product. Things like:

- General company information

- Return policy information

- General shipping information

These are essential details, but having large chunks of information duplicated across hundreds of pages isn’t ideal for SEO. Instead, you should have dedicated pages for users who want to know more.

URL Parameters

URL parameters from click tracking, analytics code, or product category filtering can often create multiple versions of the same page, which looks like duplicate content in the eyes of Google.

For example, let’s say www.shoes.com/soccer-shoes?color=blue is a duplicate of www.shoes.com/soccer-shoes?color=red, which is also a duplicate of www.shoes.com/soccer-shoes?limit=20&sessionid=123.

This would be duplicate content. As you can see, if replicated hundreds of different times for every product on an eCommerce site, it would look very sketchy.

Google does a great job of filtering URL parameters out of its index, but it isn’t perfect.

The official recommendation from Google is to either avoid using URL parameters or use their URL parameters tool if both of the following apply:

- Your site has over 1,000 pages, AND

- Google has indexed a large number of duplicate content pages resulting from URL parameters.

You can confirm all of this with Google Search Console.

Canonical Tags

Anytime you know you’re going to publish duplicate content, the easiest solution is to add a canonical tag. It tells Google, “I’m aware this page has duplicate content – here’s where the original is.”

If you’re not familiar with canonical tags, be sure not to confuse them with WordPress tags, as they’re very different. A canonical tag is a snippet of code placed in the <head> of a page that points to the URL of the original version.
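To make this concrete, here is a minimal Python sketch of how a site might generate the tag for the soccer-shoes URLs from the previous section. It is an illustration under assumptions, not a recommended implementation: the https:// scheme, the CONTENT_PARAMS allow-list, and both function names are hypothetical, and a real store would need to decide for itself which parameters actually change the page.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allow-list: parameters that genuinely change the page content.
# Everything else (color filters, limits, session IDs, tracking codes) is dropped.
CONTENT_PARAMS = {"page"}


def canonical_url(url: str) -> str:
    """Return the URL with non-content query parameters and the fragment removed."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query) if key in CONTENT_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Build the <link rel="canonical"> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}" />'


# Both parameterized variants point back to the same original page.
print(canonical_tag("https://www.shoes.com/soccer-shoes?color=blue"))
print(canonical_tag("https://www.shoes.com/soccer-shoes?limit=20&sessionid=123"))
```

Every parameterized variant of the page then declares the same original URL, so Google has a clear signal about which version to index.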

Canonical tags are often needed but seldom used when:

- Duplicating a landing page for a pay-per-click advertising campaign

- Publishing someone else’s article on your blog

Site Speed

Site speed is becoming a critical SEO metric. Google recently announced that their Core Web Vitals would be an official ranking signal as of May 2021.

Before this, Google considered page speed a ranking factor, but it wasn’t nearly as in-depth.

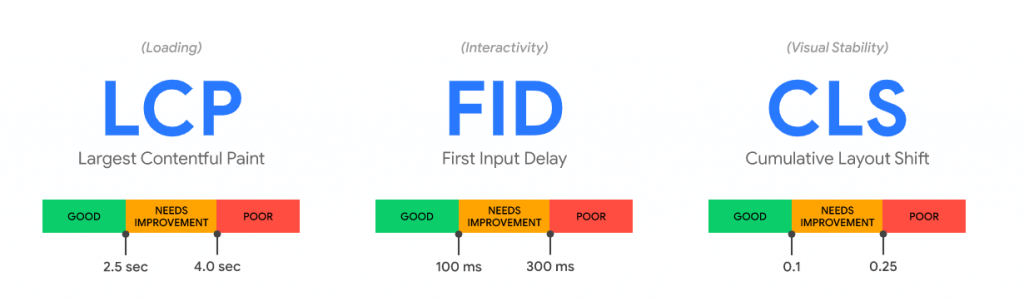

Google’s Core Web Vitals consist of three different page speed metrics:

- Largest Contentful Paint (LCP): How many seconds it takes for a page’s largest element to be displayed.

- First Input Delay (FID): The time it takes from when a user interacts with the page to when the website is actually able to process their action.

- Cumulative Layout Shift (CLS): A score Google assigns to a page based on layout shifts that occur in that page’s lifespan.

Google measures these on the following scale:

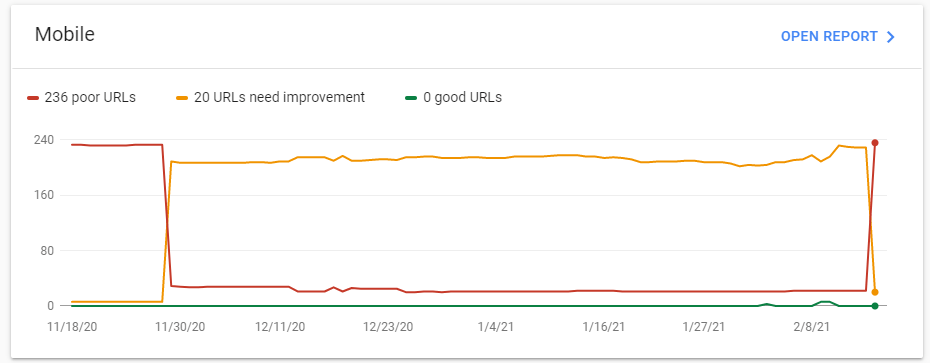
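If you collect these numbers yourself, the scale can be expressed in a few lines of Python. This is a sketch that assumes the thresholds Google has published for each metric (LCP in seconds, FID in milliseconds, CLS as a unitless score); the THRESHOLDS table and the rate function are illustrative names, not part of any Google tool.

```python
# Thresholds as (good_max, poor_min), assumed from Google's published guidance.
# Values at or below good_max rate "good", values above poor_min rate "poor",
# and anything in between rates "needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a single Core Web Vitals measurement."""
    good_max, poor_min = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_min:
        return "needs improvement"
    return "poor"


for metric, value in [("LCP", 7.0), ("FID", 80), ("CLS", 0.2)]:
    print(metric, value, rate(metric, value))
```

Note that for all three metrics a lower value is better, including the CLS score.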

To see these stats for any particular page, you can use PageSpeed Insights. For a sitewide overview, you’ll need to use Google Search Console.

Page speed is important because of how much it affects the user’s experience.

If the Largest Contentful Paint takes seven seconds, a user will perceive the page as “slow” and might exit in favour of a faster site. If the First Input Delay is multiple seconds, a user might need to click on something numerous times, which can be frustrating. And if the site has a high (poor) Cumulative Layout Shift score, elements can move just as the user is about to click, causing them to click something accidentally.

Now imagine those issues across every page on an entire website. It adds up.

Page speed is something web developers should think about at every stage of the website build. For example, if you’re starting with a WordPress theme, be sure to pick the one with the best Core Web Vitals scores to minimize the time it takes to optimize.

If you want to add a specific plugin (a chatbot, newsletter script, etc.), look at how those plugins affect your page speed.

Additionally, make sure you have a reliable, preferably dedicated, website host. Upgrading your managed WordPress hosting is one of the easiest ways to increase page speed dramatically.

User Experience

SEO specialists often undervalue the importance of user experience, but that’s certainly not the case with Google.

For Google, user experience is everything.

- Are users finding what they’re looking for?

- Are users annoyed or frustrated by something a brand is doing?

- Are users not getting their questions answered?

- Do users feel they’re receiving information they can trust?

- Can users contact brands with questions or concerns?

- Can users seamlessly navigate through a site?

- Do users feel they’re being provided valuable (and unique) information?

User experience is a nuanced topic with no clear-cut solutions, but all web developers and designers should keep it in mind.

Here are some tips to help:

- Ensure your content is segmented, so it’s hyper-relevant to your target audience.

- If you want #1 rankings, create content that deserves to rank #1.

- In terms of design, put yourself in the shoes of your target audience.

- Keep things simple.

- People prefer transparency.

Robots.txt

A robots.txt file is essentially a map that tells search engines where they can and can’t go.

While it’s not vital for your site to have one, it’s recommended, especially for large websites that require complex SEO web development.

A robots.txt file can:

- Keep crawlers out of individual sections of a website (staging sites, forums, etc.).

- Prevent server overload.

- Prevent search engines from crawling internal search results.

- Prevent certain types of media (images, videos, etc.) from appearing in search results.
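To check that a set of rules does what you expect before deploying it, you can test it with the robots.txt parser in Python’s standard library. The rules, the example.com domain, and the allowed helper below are all made up for illustration; this is a sketch, not a recommended configuration for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block internal search results and a staging area
# for every crawler, and leave the rest of the site open.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Return True if the given crawler may fetch the path under ROBOTS_TXT."""
    return parser.can_fetch(agent, "https://example.com" + path)


for path in ["/soccer-shoes", "/search?q=shoes", "/staging/index.html"]:
    print(path, allowed("Googlebot", path))
```

Keep in mind that the standard-library parser does simple prefix matching, so it may not interpret every pattern exactly the way a given search engine does.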

To learn more, check out Ahrefs’ guide on robots.txt.

Staging Site Issues

Staging sites are essential for testing websites in a real environment. However, many web developers aren’t aware of the negative impact they can have on SEO – or how to resolve it.

Crawling & Indexing

It’s vital that Google does NOT index the staging site. If it does, Google may opt to show the staging site in search results instead of the real one. It could also cause massive issues with duplicate content.

There are many ways to block Google (and other search engines) from crawling and indexing a staging site:

- Use a robots.txt file

- Add a noindex tag to the page metadata: <meta name="robots" content="noindex">

- Password protect it

For staging sites, Google recommends using password protection. That way, there’s zero chance of Google crawling (or indexing) the site because it can’t see the content.
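If you rely on the noindex tag rather than a password, it’s worth verifying that the tag is really present in the staging pages. The following Python sketch does that with the standard library’s HTML parser; the RobotsMetaParser class and has_noindex function are hypothetical names, and the sample markup is invented for the example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            self.directives.update(part.strip().lower() for part in content.split(","))


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


staging_page = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
live_page = "<html><head><title>Soccer shoes</title></head><body></body></html>"
print(has_noindex(staging_page), has_noindex(live_page))
```

The same check is useful in the other direction: running it against the live site after launch catches a noindex tag that was accidentally carried over from staging.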

Images

Many web developers, web designers, and site owners don’t use images as efficiently as they should.

Resolution

Be sure to size images according to the section they’re being placed in. For example, it wouldn’t make sense to have a 2000 x 3000-pixel image in a 200 x 300 section. By resizing the image, you can save some processing time and increase page speed.

Image Format

Don’t use the PNG format if the image doesn’t have transparency – PNG files tend to be larger, which hurts load times.

Alt Text & Title Text

Use them! Text helps Google understand what the image is, and it gives search engines more information to gauge the content relevance (and quality).

However, don’t keyword stuff. Be natural. For every image, pretend that you’re describing the image to someone.

File names

Make sure your file names are relevant. Avoid general file names like IMG00295810.jpg.

Instead, be as descriptive as possible without it getting too lengthy. For an image of a husky eating a bone, husky-eating-bone.jpg is an example of a suitable file name.
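If images are uploaded in bulk, this naming convention can be applied automatically. Here is a small Python sketch that turns a short description into a file name; the image_filename function and its max_words limit are assumptions made for the example, not an established rule.

```python
import re


def image_filename(description: str, extension: str = "jpg", max_words: int = 6) -> str:
    """Turn a short description into a lowercase, hyphen-separated file name."""
    words = re.findall(r"[a-z0-9]+", description.lower())
    return "-".join(words[:max_words]) + "." + extension


print(image_filename("Husky eating bone"))
```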

Semantic Markup for Images

Google doesn’t index CSS background images, so make sure any image you want indexed is embedded with an HTML <img> element, like this: <img src="husky-eating-large-bone.jpg" alt="An adult husky eating a large bone" />

Open Graph

Most social sharing websites allow you to choose which image is displayed when a page is shared by adding an Open Graph tag to the page’s <head>, like this: <meta property="og:image" content="https://example.com/link-to-image.jpg" />

Google Search Console

Google Search Console, formerly known as Google Webmaster Tools, is a web service that allows site owners to monitor, maintain, and optimize their website’s Google presence.

Google Search Console can give you critical information about how your website performs on the largest search engine in the world. It can point out site errors, indexing issues, security issues, manual penalties, load times, mobile usability, and much more. If Google doesn’t like something, it’ll tell you.

Having a clean bill of health on Google Search Console is something every website owner and web developer should strive for.

SEO & Web Development Conclusion

SEO web development is tedious work. It’s not easy, but it’s necessary and paves a path for your content to shine above the competition.

To keep track of your work, it’s best to use SEO reporting software, like Ahrefs. Ahrefs allows you to run in-depth on-page audits, which give you a “health score” from 1-100, along with a list of action items.

For maximum results, start with the easiest issues affecting your entire site. Tackling these first will result in a large improvement in your health score and provide a nice incentive to continue with the more tedious changes.